Saturday 11 July 2020

Introducing Minuit

By hub, Saturday 11 July 2020 at 15:13 :: Gnome

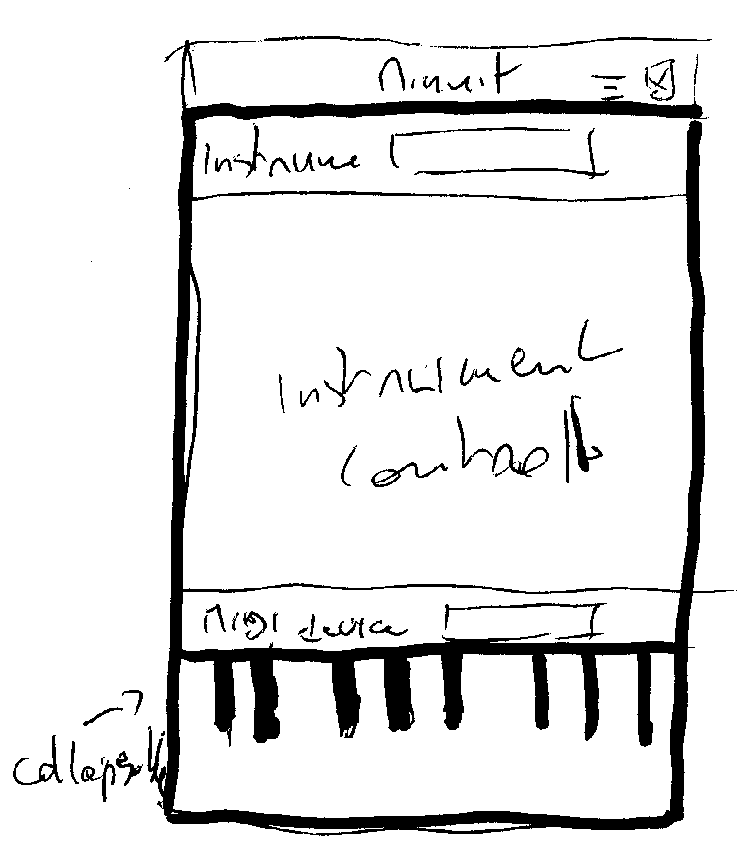

It all started with a sketch on paper (beware of my poor penmanship).

I was thinking about how I could build a musical instrument in software. Nothing new here actually; there are even applications like VMPK that fit the bill. But this is also about learning, as I have started to be interested in music software.

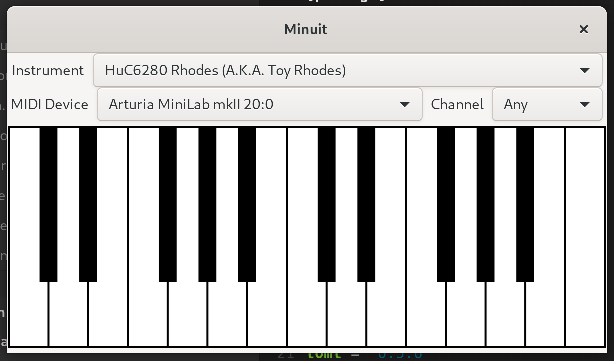

This is how Minuit was born. The name comes from a play on words: in French, minuit is midnight and midi is midday. MIDI, of course, is the acronym for Musical Instrument Digital Interface, the technology at the heart of computer-aided music. Also, minuit sounds a bit like minuet, which is a social dance of French origin.

I have several goals here:

- learn about MIDI: The application can be controlled using MIDI.

- learn about audio: Of course you have to output audio, so now is the best time to learn about it.

- learn about music synthesis: for now I use existing software to do so. The first instrument was ripped from Qwertone, to produce a simple tone, and the second is just a Rhodes toy piano using soundfonts.

- learn about audio plugins: the best way to bring new instruments is to use existing plugins, and there is a large selection of them that are libre.
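
To give an idea of what the first three goals involve, here is a minimal sketch (not the actual Minuit code) of how a MIDI note number maps to a frequency and how a simple sine tone can be rendered into a buffer of samples. The function names `midi_note_to_freq` and `render_sine`, and the parameters they take, are made up for illustration; the tuning assumes 12-tone equal temperament with A4 (MIDI note 69) at 440 Hz.

```rust
use std::f32::consts::TAU;

/// Convert a MIDI note number to a frequency in Hz.
/// Assumption: 12-tone equal temperament, A4 (note 69) tuned to 440 Hz.
pub fn midi_note_to_freq(note: u8) -> f32 {
    440.0 * 2.0_f32.powf((f32::from(note) - 69.0) / 12.0)
}

/// Render `num_samples` mono samples of a sine tone for the given MIDI note.
/// `amplitude` is expected to be in the range 0.0..=1.0.
/// This is a hypothetical helper: a real synth would keep a running phase
/// between buffers and apply an envelope to avoid clicks.
pub fn render_sine(note: u8, sample_rate: u32, num_samples: usize, amplitude: f32) -> Vec<f32> {
    let freq = midi_note_to_freq(note);
    // Phase increment per sample, in radians.
    let step = TAU * freq / sample_rate as f32;
    (0..num_samples)
        .map(|i| amplitude * (step * i as f32).sin())
        .collect()
}
```

For example, `render_sine(60, 44_100, 44_100, 0.5)` would produce roughly one second of middle C at half volume, ready to be handed to whatever audio output layer is in use.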

Of course, I will use Rust for that, and that means I will have to deal with the gaps found in the Rust ecosystem, notably by interfacing libraries that are meant to be used from C or C++.

I also had to create a custom widget for the piano input, and for this I basically rewrote in Rust a widget I found in libgtkmusic that was written in Vala. That allowed me to refine my tutorial on subclassing Gtk widgets in Rust.

To add to the learning, I decided to do everything in Builder instead of my usual Emacs + Terminal combo, and I have to say it is awesome! It is good to start using tools you are not used to (ch, ch, changes!).

In the end, the first working version looks like this:

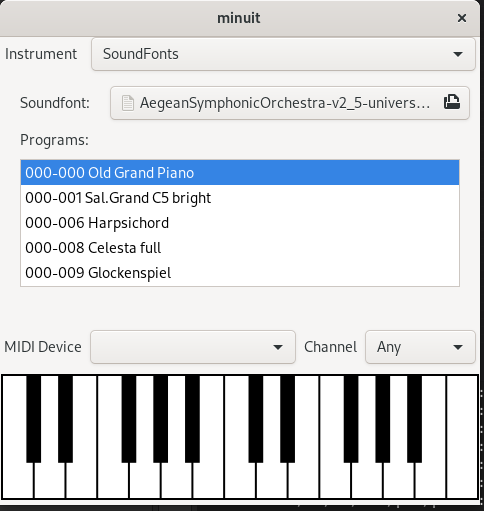

But I didn't stop here. After some weeks off doing other things, I resumed. I was wondering, since I have a soundfont player, if I could turn this into a generic soundfont player. So I got to this:

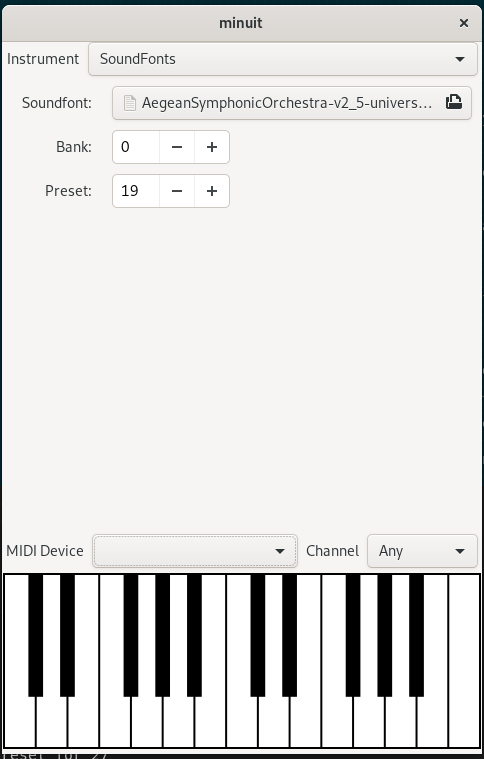

Then I iterated on the idea, learning about banks and presets for soundfonts, which come straight from MIDI, and made the UI look like this:

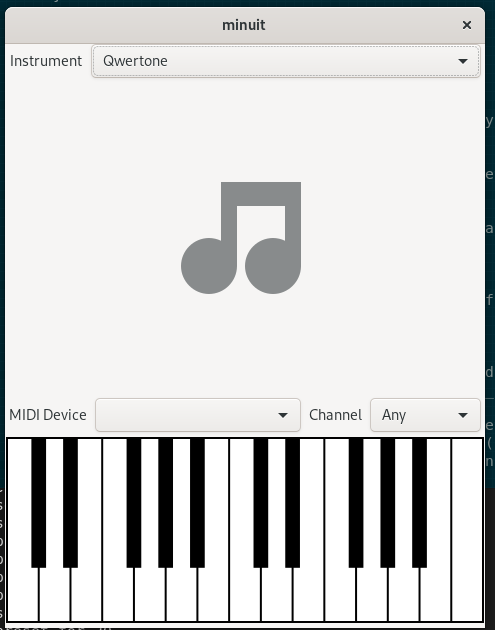
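
To illustrate how banks and presets relate to MIDI, here is a minimal sketch (again, not the actual Minuit code) that builds the raw MIDI bytes for selecting a bank and a preset on a channel: two Control Change messages for Bank Select (controllers 0 and 32) followed by a Program Change. The function name `select_preset` is hypothetical, and treating the bank as a 14-bit number split into MSB and LSB is an assumption: synthesizers differ in how they interpret the two Bank Select bytes.

```rust
/// Build the raw MIDI bytes that select a bank and preset on a channel.
/// Hypothetical helper for illustration only.
/// Assumption: `bank` is a 14-bit value sent as Bank Select MSB (CC 0)
/// followed by Bank Select LSB (CC 32); some synths only look at one of them.
pub fn select_preset(channel: u8, bank: u16, preset: u8) -> Vec<u8> {
    let ch = channel & 0x0F; // MIDI channels are 0..=15 on the wire
    let bank = bank & 0x3FFF; // keep only 14 bits
    let preset = preset & 0x7F; // program numbers are 0..=127
    vec![
        0xB0 | ch, 0x00, (bank >> 7) as u8,   // Control Change: Bank Select MSB
        0xB0 | ch, 0x20, (bank & 0x7F) as u8, // Control Change: Bank Select LSB
        0xC0 | ch, preset,                    // Program Change
    ]
}
```

The bank and preset numbers a soundfont exposes for each of its presets are the values that would be fed into such a function.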

Non-configurable instruments have a placeholder.

There is no release yet, but it is getting close to the MVP. I am still missing an icon.

Going forward, my focus will be:

- focus on user experience. Make this a versatile musical instrument, for which the technology is a means, not an end.

- focus on versatility by bringing more tones. I'd rather avoid free-form plugins and instead integrate plugins into the app, while still leveraging the plugin architecture whenever possible.

- explore other ideas in musical creativity.